What is the 3-2-1 backup rule?

The 3-2-1 backup rule has long been the gold standard for data protection. Its appeal was its simplicity — keep three copies of your data, store them on two different media and ensure one copy is off-site. For years, it offered a practical, reliable approach at a time when backups were mostly local and threats were far less complex.

But that was then.

Today’s threat landscape is drastically different. Cyberattacks such as ransomware now target backup infrastructure as well as production data, aiming to eliminate every path to recovery. Add to that the shift toward hybrid IT environments, always-on systems and cloud-first operations, and the 3-2-1 model begins to show its limits. What once worked for simpler infrastructures now struggles to protect against the scale, speed and sophistication of modern threats.

In this blog, we’ll unpack the 3-2-1 backup strategy, explore why it became a trusted baseline and examine its limitations today. We’ll also look at how it’s evolving into stronger models like 3-2-1-1-0, which are designed to close the gaps that modern threats exploit. Finally, we’ll discuss how business continuity and disaster recovery (BCDR) solutions go beyond the basics to deliver true resilience across complex IT environments.

What is the 3-2-1 rule for backups?

The 3-2-1 backup rule is a time-tested strategy for data protection. It recommends keeping three copies of your data, stored on two different types of media, with one copy kept off-site. For years, it served as the go-to model for minimizing data loss, whether from accidental deletion, hardware failure or local disasters. Its strength lies in its simplicity: create redundancy across formats and locations to ensure recoverability.

The model became an industry standard because it aligned well with how IT environments operated in the past. Workloads were largely on-prem, threats were limited in scale and recovery times were less critical than they are today. In that context, the 3-2-1 rule delivered consistent and dependable protection.

Let’s break it down:

3 – Maintain three copies of your data

This means keeping your primary production data and at least two backup copies. The goal is to reduce the risk of total loss. If one copy is corrupted or lost, the others act as a safety net. Traditionally, this would be a live copy on your workstation or server, a backup on local storage and a third copy elsewhere.

Redundancy is crucial. Even the most secure primary system can fail. Having multiple versions of your data significantly improves your ability to recover without major disruption.

2 – Keep backups on two different types of media

Storing all backups on the same kind of media introduces a single point of failure. Using two different types of storage, such as an external hard drive and cloud storage, adds resilience. If one fails due to hardware issues, compatibility problems or malware, the other remains accessible.

In traditional setups, this might have involved using a network-attached storage (NAS) device for one backup and magnetic tape for another. Today, the mix could include local disk, cloud storage or immutable backup appliances. The goal is to diversify so that no single technology failure can put all your data at risk.

1 – Keep one backup copy off-site

Local copies are vulnerable to site-wide disasters, such as fires, floods, theft or even ransomware attacks that spread across the network. Keeping at least one copy in a separate geographic location ensures recoverability even when the primary site is compromised.

Off-site copies like cloud-based backups give you options during recovery and reduce your dependency on a single location or infrastructure.
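To make the three rules concrete, here is a minimal Python sketch that checks a backup plan against 3-2-1. The `Copy` record, its fields and the `meets_3_2_1` function are hypothetical names for illustration, and the sketch assumes you already know each copy’s media type and whether it sits outside your primary site. Note that the production copy counts toward the three.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    """One copy of a dataset (hypothetical model for illustration)."""
    name: str
    media: str     # storage type, e.g. "disk", "tape", "cloud-object"
    offsite: bool  # True if stored outside the primary site


def meets_3_2_1(copies: list[Copy]) -> bool:
    """Three copies, on at least two media types, with at least one off-site."""
    three_copies = len(copies) >= 3
    two_media = len({c.media for c in copies}) >= 2
    one_offsite = any(c.offsite for c in copies)
    return three_copies and two_media and one_offsite


plan = [
    Copy("production server", "disk", offsite=False),
    Copy("local NAS backup", "disk", offsite=False),
    Copy("cloud backup", "cloud-object", offsite=True),
]
print(meets_3_2_1(plan))      # True
print(meets_3_2_1(plan[:2]))  # False: two copies, one media type, nothing off-site
```

The production server and the NAS share a media type here, which is fine: the rule asks for at least two types across all copies, not a different type for each copy.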

What are the shortcomings of the 3-2-1 backup model?

The 3-2-1 backup rule has served IT teams well for decades. However, as infrastructures grow more complex and threats become more sophisticated, the model no longer covers all the bases. Ransomware now directly targets backups. Cloud-first environments add new layers of complexity, and businesses expect faster, more reliable recovery than ever before.

While 3-2-1 still offers a strong foundation, relying on it alone in today’s environment leaves critical gaps. Here’s where it falls short:

Vulnerability to ransomware and cyberthreats

Modern ransomware attacks don’t stop at production data. Attackers now go after backup environments, encrypting or deleting backups to block recovery and increase leverage. If backups are stored online, on the same network or lack isolation, they’re just as exposed as the production environment.

Worse yet, if ransomware infects a system before it’s detected, the encrypted or corrupted files may be backed up automatically. That means you’re preserving the damage, and once older, clean versions age out of retention, recovery becomes extremely difficult. Without critical additional layers like immutability or air-gapped storage, backups themselves become a risk.

No emphasis on backup integrity and recoverability

The 3-2-1 rule tells you where and how to store copies, but it does not say whether those copies actually work when you need them.

That’s a serious problem. Unverified backups can fail to restore at critical moments. Without regular testing and validation, you’re relying on a false sense of security. If your backups can’t restore cleanly, they’re as good as no backup at all.

Poor fit for modern cloud and SaaS environments

The original 3-2-1 model predates widespread cloud computing and SaaS adoption. It wasn’t designed with cloud-native workloads, virtual machines or applications like Microsoft 365 and Google Workspace in mind.

Most SaaS platforms don’t include robust native backups or long-term retention. If you don’t explicitly back up that data yourself, it may be unrecoverable. Following the 3-2-1 rule without adapting it for cloud and SaaS services can leave huge parts of your environment unprotected.

Lack of focus on recovery objectives and business continuity

3-2-1 is all about redundancy. However, it doesn’t address how quickly or completely you can recover.

Recovery time objectives (RTOs) and recovery point objectives (RPOs) are critical metrics for business continuity. RTO is the maximum acceptable time to restore systems after a disruption. RPO is the maximum acceptable amount of data loss, measured as the time since the last good backup; in practice, your backup interval sets the worst case. The 3-2-1 model doesn’t account for either metric, even though both determine whether operations keep moving during a disruption.
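RTO and RPO checks come down to simple arithmetic. The sketch below, under stated assumptions, treats the backup interval as the worst-case data loss (a failure just before the next scheduled backup loses roughly one full interval of changes) and estimates restore time as data size divided by sustained throughput. The function names and example figures are hypothetical, and the restore estimate ignores protocol overhead, verification and boot time, so real restores will take longer.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta) -> timedelta:
    # A failure just before the next scheduled backup loses roughly one
    # full interval of changes, so the interval bounds the data loss.
    return backup_interval


def estimate_restore_time(data_bytes: int, throughput_bits_per_s: float) -> timedelta:
    # Idealized transfer time: bytes -> bits, divided by sustained throughput.
    # Ignores overhead, verification and boot time.
    return timedelta(seconds=data_bytes * 8 / throughput_bits_per_s)


def meets_objective(actual: timedelta, objective: timedelta) -> bool:
    return actual <= objective


rpo = timedelta(hours=4)
rto = timedelta(hours=4)

nightly = worst_case_data_loss(timedelta(hours=24))
hourly = worst_case_data_loss(timedelta(hours=1))
print(meets_objective(nightly, rpo))  # False: up to a day of changes at risk
print(meets_objective(hourly, rpo))   # True

# Restoring 2 TB (decimal) over a 1 Gbit/s link takes at least ~4.4 hours.
restore = estimate_restore_time(2 * 10**12, 10**9)
print(restore)                        # 4:26:40
print(meets_objective(restore, rto))  # False
```

Even a plan that satisfies 3-2-1 can miss an RTO like this one if the only usable copy has to come back over a slow link.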

Want to know what an outage could mean for your business — or your clients’? Use our Recovery Time & Downtime Cost Calculator to find out what’s really at stake and how fast you need to bounce back.

Fig 1: Total cost of downtime

How has the 3-2-1 backup strategy evolved?

As cyberthreats grow more targeted and infrastructure becomes more distributed, the traditional 3-2-1 model has evolved to fill the gaps it once left open. These modern backup strategies go beyond the core ideas of redundancy and geographic separation, adding immutability, verification and layered resilience to meet today’s IT and security demands.

Let’s look at how the 3-2-1 rule has been extended into stronger, more flexible models.

3-2-1-1 backup

The 3-2-1-1 strategy builds on the original model by adding a critical extra layer: one copy of data that is either immutable or stored offline.

This means the added backup copy cannot be modified or deleted, even if attackers breach your systems. Whether it’s stored on air-gapped hardware or in immutable cloud storage, this layer protects your backups from ransomware encryption or insider threats. It’s a simple but powerful enhancement that makes recovery more reliable in the face of modern cyberattacks.

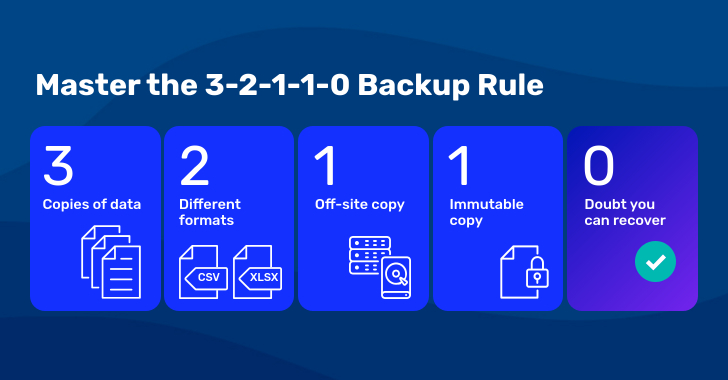

3-2-1-1-0 backup

The 3-2-1-1-0 model takes things a step further. Along with an immutable or offline copy, it requires zero backup errors. The “0” stands for zero errors, confirmed through automated verification and testing that your backups are intact and recoverable.

By verifying every copy, this variation helps ensure your backups work when it matters most, which makes it ideal for organizations where reliability, accuracy and recovery speed are critical.
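A minimal Python sketch of a 3-2-1-1-0 check follows. It reports which rules a plan fails rather than returning a single yes or no, which is easier to act on. `BackupCopy`, its fields and `check_3_2_1_1_0` are hypothetical, and the `verify_errors` field assumes you already have an error count from each copy’s most recent automated verification or restore test.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    name: str
    media: str                  # e.g. "disk", "tape", "cloud-object"
    offsite: bool               # stored outside the primary site
    immutable_or_offline: bool  # cannot be altered or deleted over the network
    verify_errors: int = 0      # errors from the most recent verification run


def check_3_2_1_1_0(copies: list[BackupCopy]) -> list[str]:
    """Return the rules this plan fails; an empty list means it passes."""
    failures = []
    if len(copies) < 3:
        failures.append("3: fewer than three copies")
    if len({c.media for c in copies}) < 2:
        failures.append("2: fewer than two media types")
    if not any(c.offsite for c in copies):
        failures.append("1: no off-site copy")
    if not any(c.immutable_or_offline for c in copies):
        failures.append("1: no immutable or offline copy")
    if sum(c.verify_errors for c in copies) > 0:
        failures.append("0: verification reported errors")
    return failures


plan = [
    BackupCopy("production", "disk", offsite=False, immutable_or_offline=False),
    BackupCopy("local appliance", "disk", offsite=False, immutable_or_offline=False),
    BackupCopy("cloud copy", "cloud-object", offsite=True,
               immutable_or_offline=True, verify_errors=2),
]
print(check_3_2_1_1_0(plan))  # ['0: verification reported errors']
```

This plan would pass the older 3-2-1-1 model, but the “0” rule still flags it until the cloud copy verifies cleanly.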

Want to go deeper? Read our blog on the 3-2-1-1-0 backup rule and see how it’s changing the game for modern backup strategies.

Fig 2: The 3-2-1-1-0 backup strategy

3-2-2 backup

The 3-2-2 model introduces another layer of resilience by keeping two copies of your data off-site, often in different cloud environments or geographically distant data centers.

This approach minimizes dependency on a single off-site backup. If one location experiences downtime or a cyberthreat, the other remains available. It suits organizations that need high availability across distributed systems.

4-3-2 backup

Designed for high-compliance, high-uptime environments, the 4-3-2 model adds even more redundancy. It includes four copies of your data stored across three separate locations, two of which are off-site.

This strategy supports strict regulatory frameworks and mission-critical systems where downtime or data loss is not an option. While more complex to manage, it provides robust protection for businesses with very little tolerance for risk.
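Because 3-2-1, 3-2-2 and 4-3-2 differ mainly in their thresholds, one way to compare them is to encode each rule as data. The sketch below is a hypothetical illustration: following the descriptions above, it reads the second digit as media types for 3-2-1 and 3-2-2 and as locations for 4-3-2, and it counts distinct off-site locations so that two copies in the same remote site don’t count twice. The `Copy`, `Rule`, `RULES` and `satisfies` names are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    name: str
    media: str     # e.g. "disk", "tape", "cloud-object"
    location: str  # e.g. "hq", "cloud-a", "cloud-b"
    offsite: bool  # True if the location is outside the primary site


@dataclass(frozen=True)
class Rule:
    min_copies: int
    min_media: int = 1
    min_locations: int = 1
    min_offsite_locations: int = 0


# Hypothetical encoding of the thresholds described for each model.
RULES = {
    "3-2-1": Rule(min_copies=3, min_media=2, min_offsite_locations=1),
    "3-2-2": Rule(min_copies=3, min_media=2, min_offsite_locations=2),
    "4-3-2": Rule(min_copies=4, min_locations=3, min_offsite_locations=2),
}


def satisfies(copies: list[Copy], rule: Rule) -> bool:
    media = {c.media for c in copies}
    locations = {c.location for c in copies}
    # Distinct off-site locations, so two copies in one remote site count once.
    offsite_locations = {c.location for c in copies if c.offsite}
    return (
        len(copies) >= rule.min_copies
        and len(media) >= rule.min_media
        and len(locations) >= rule.min_locations
        and len(offsite_locations) >= rule.min_offsite_locations
    )


plan = [
    Copy("production", "disk", "hq", offsite=False),
    Copy("cloud copy A", "cloud-object", "cloud-a", offsite=True),
    Copy("cloud copy B", "cloud-object", "cloud-b", offsite=True),
]
print({name: satisfies(plan, rule) for name, rule in RULES.items()})
# {'3-2-1': True, '3-2-2': True, '4-3-2': False}
```

Adding a local backup at the primary site would bring this plan to four copies across three locations, which also satisfies 4-3-2.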

How do BCDR solutions address problems with 3-2-1 backup?

While the 3-2-1 rule outlines a solid foundation for storing and structuring backups, it doesn’t address the full scope of recovery. That’s where modern business continuity and disaster recovery (BCDR) platforms come in. These solutions combine backup, verification and recovery into a single, integrated system, helping businesses recover quickly, reliably and securely with minimal disruption.

Immutable backup storage

Backups are only useful if they remain untouched until needed. Immutable backups protect data from being altered or deleted, whether due to ransomware, insider threats or accidental errors.

With immutability, backup copies are locked from changes once written. This ensures you always have a clean recovery point, even if your production environment is compromised. It’s a vital last line of defense against today’s most dangerous threats.
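The toy in-memory Python class below illustrates write-once-read-many (WORM) behavior with a retention lock: overwrites are refused, and deletes fail until the lock expires. `WormStore` and its methods are hypothetical and only illustrate the concept. Real immutability has to be enforced by the storage layer itself (for example, appliances or services such as Amazon S3 Object Lock), because application code like this can be bypassed by anyone with access to the underlying storage.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


class ImmutableStoreError(Exception):
    """Raised when a write or delete would violate immutability."""


class WormStore:
    """Toy in-memory write-once-read-many store with a retention lock."""

    def __init__(self, retention: timedelta):
        self._retention = retention
        self._objects: dict[str, tuple[bytes, datetime]] = {}  # key -> (data, locked_until)

    def __contains__(self, key: str) -> bool:
        return key in self._objects

    def put(self, key: str, data: bytes, now: Optional[datetime] = None) -> None:
        now = now if now is not None else datetime.now(timezone.utc)
        if key in self._objects:
            raise ImmutableStoreError(f"{key!r} already exists; overwrites are refused")
        self._objects[key] = (bytes(data), now + self._retention)

    def get(self, key: str) -> bytes:
        return self._objects[key][0]

    def delete(self, key: str, now: Optional[datetime] = None) -> None:
        now = now if now is not None else datetime.now(timezone.utc)
        _, locked_until = self._objects[key]
        if now < locked_until:
            raise ImmutableStoreError(f"{key!r} is locked until {locked_until.isoformat()}")
        del self._objects[key]


store = WormStore(retention=timedelta(days=30))
t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
store.put("backup-001", b"snapshot bytes", now=t0)

try:  # e.g. ransomware trying to replace the backup with ciphertext
    store.put("backup-001", b"ransomware ciphertext", now=t0)
    overwrite_blocked = False
except ImmutableStoreError:
    overwrite_blocked = True

try:  # deletion one day in, well inside the 30-day lock
    store.delete("backup-001", now=t0 + timedelta(days=1))
    early_delete_blocked = False
except ImmutableStoreError:
    early_delete_blocked = True

# Once the lock has expired, deletion is allowed.
store.delete("backup-001", now=t0 + timedelta(days=31))
print(overwrite_blocked, early_delete_blocked, "backup-001" in store)  # True True False
```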

Automated verification of backups

One of the biggest risks in backup strategies is discovering, too late, that a backup failed or can’t be restored.

BCDR platforms address that risk by automatically verifying backups. These built-in checks confirm that backups are complete, consistent and restorable, giving teams confidence that recovery will work when it matters most.
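One building block of automated verification is comparing each backed-up file against a checksum recorded when the backup was taken. The sketch below uses SHA-256 from Python’s standard `hashlib` module; the function names and the manifest layout are hypothetical. Keep in mind that a matching checksum only proves a copy is intact, not that the system will boot or the application will start, which is why full verification also includes restore or boot tests.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large backup files don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Record a checksum for every file under root at backup time."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify(root: Path, manifest: dict[str, str]) -> list[str]:
    """Compare current files against the manifest and list any problems."""
    problems = []
    for rel_path, expected in manifest.items():
        path = root / rel_path
        if not path.is_file():
            problems.append(f"missing: {rel_path}")
        elif sha256_of(path) != expected:
            problems.append(f"checksum mismatch: {rel_path}")
    return problems


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "db.dump").write_bytes(b"rows...")
    (root / "config.yml").write_text("retention: 30d\n")
    manifest = build_manifest(root)

    clean_result = verify(root, manifest)
    (root / "db.dump").write_bytes(b"silently corrupted")  # simulate bit rot
    (root / "config.yml").unlink()                          # simulate a lost file
    damaged_result = verify(root, manifest)

print(clean_result)    # []
print(damaged_result)  # ['missing: config.yml', 'checksum mismatch: db.dump']
```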

Built-in hybrid cloud redundancy

Modern BCDR platforms replicate data both locally and in the cloud. This hybrid approach delivers the best of both worlds: rapid on-site recovery for day-to-day incidents and off-site protection against major outages, disasters or data center failures.

The process is automated, reducing the need for manual effort while ensuring that redundancy is always up to date.

Frequent backups with instant recovery

Continuous data protection is now the expectation. BCDR solutions support tight RTOs and RPOs, reducing downtime, minimizing data loss and keeping critical systems available when disruptions happen. With high-frequency, automated backups and instant virtualization, businesses can get back online within minutes after a disruption.

Go from backup to resilience with Datto BCDR

Traditional backup strategies were designed to create data copies. But today, real resilience means going further and ensuring the business can bounce back quickly, securely and without compromise. That’s where backup must evolve into full business continuity.

Datto BCDR helps MSPs and IT teams do exactly that. It delivers verified, immutable and instantly recoverable backups — all within a single platform — so you’re not just storing data, you’re staying operational no matter what happens.

Here’s how Datto makes it possible:

Hybrid redundancy: Datto stores your data both locally and in the secure Datto Cloud. Backups are first saved to purpose-built local appliances, then replicated to the cloud, providing fast local recovery plus off-site protection for larger events.

The Datto Cloud is built for resilience, featuring:

- AES-256 encryption for data at rest and in transit

- Two-factor authentication (2FA) to restrict access

- Immutable storage that blocks unauthorized edits or deletions

- Geo-distributed protection for redundancy and compliance

Together, these capabilities ensure that backup copies remain secure, compliant and recovery-ready.

Ransomware resilience: Datto doesn’t just protect your backups; it also actively monitors for ransomware. Each backup is scanned for signs of encryption or malicious changes, helping identify threats early and ensuring clean restore points are always available.

This combination of immutability and threat detection strengthens your ability to recover without reintroducing infected data.
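One widely used heuristic for spotting ransomware encryption is byte entropy. Encrypted data looks random, so its Shannon entropy approaches the maximum of 8 bits per byte, while documents and databases usually score much lower. The Python sketch below flags a file whose entropy jumps sharply between two backups. It is a generic illustration, not a description of how Datto’s detection works; the function names, the 7.5 threshold and the minimum jump are assumptions. Compressed formats such as ZIP or JPEG are also high-entropy, so a real detector needs more signals to avoid false positives.

```python
import math
import os
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Bits of entropy per byte, H = sum(p * log2(1/p)), from 0 to 8."""
    if not data:
        return 0.0
    n = len(data)
    return sum((count / n) * math.log2(n / count) for count in Counter(data).values())


def looks_encrypted(before: bytes, after: bytes,
                    threshold: float = 7.5, min_jump: float = 2.0) -> bool:
    """Heuristic: flag content that became near-random between two backups."""
    e_before = shannon_entropy(before)
    e_after = shannon_entropy(after)
    return e_after >= threshold and (e_after - e_before) >= min_jump


report = b"quarterly report: revenue up, costs flat. " * 200
ciphertext_stand_in = os.urandom(len(report))  # random bytes mimic encrypted output

print(round(shannon_entropy(report), 2))
print(looks_encrypted(report, report))               # False: file unchanged
print(looks_encrypted(report, ciphertext_stand_in))  # True: entropy jumped to ~8
```

Comparing against the previous backup, rather than using the threshold alone, is what lets a file that was always high-entropy (such as an archive) pass without an alert.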

Automated screenshot and application verification: Every Datto backup is automatically tested and verified. The platform runs application-level checks and provides visual proof (screenshots) that systems can boot and recover correctly.

No guessing. No surprises. You know your backups will work because Datto proves it.

Instant virtualization, locally or in the cloud: When every minute counts, Datto enables instant virtualization, whether on a local appliance or in the Datto Cloud. Spin up full systems within minutes, not hours, and restore operations without waiting for full data recovery.

This keeps your business moving, even during major disruptions.

Want to see how Datto helps MSPs and businesses achieve end-to-end business continuity? Explore the Datto BCDR platform to learn more.

Looking to build a comprehensive business continuity and disaster recovery strategy for your business? Download the Ultimate Guide to BCDR to walk through the key steps to becoming recovery-ready. It’s built for IT teams and MSPs who need to keep systems running, no matter what.